Can Smilegate-Raised Graduate Students Open a New Horizon in Artificial Intelligence?

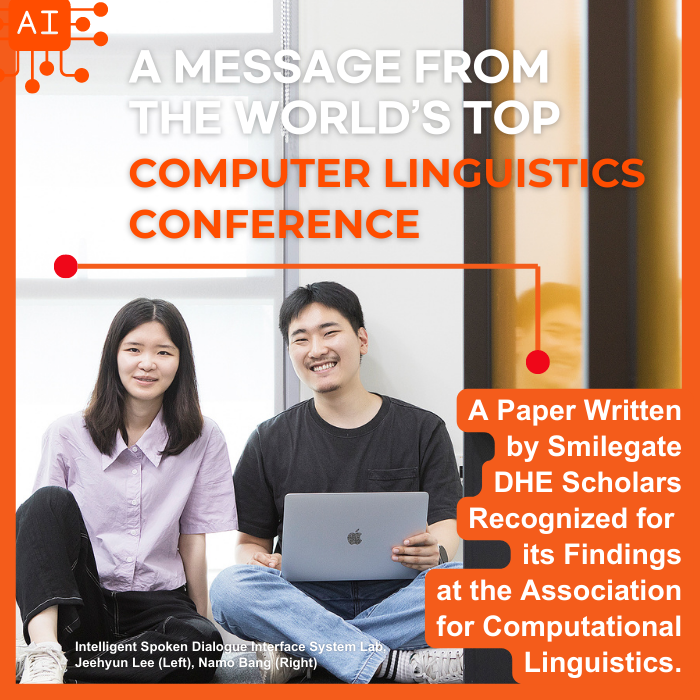

A paper written by graduate students in the Digital Human Entertainment major at Sogang University (hereafter DHE) was accepted to Findings at the world’s top computational linguistics conference. The co-authors, Namo Bang and Jeehyun Lee, drew international attention because both hold liberal arts degrees. We interviewed the two students about the paper and the merits of the DHE program.

A Message from the World’s Top Computational Linguistics Conference

As AI research grows more active around the world, welcome news reached the Sogang University AI center. A paper written by two Smilegate DHE scholars was accepted to Findings at ACL, the world’s top computational linguistics conference.

* ACL: Association for Computational Linguistics

ACL is the world’s top computational linguistics conference and holds the highest tier in various computer science conference rankings. These rankings are determined by each conference’s history of impact.

Intelligent Spoken Dialogue Interface System (ISDS) is the name of a lab led by Professor Myoung-Wan Koo at the School of Artificial Intelligence, Sogang University. Bang and Lee, both students in the ISDS lab, contributed equally to the paper. The paper is published in the conference handbook that will be available at the 61st Annual Meeting of the ACL (ACL 2023). The conference takes place in Toronto, Canada, from July 9 to July 14, 2023.

Even AI Can Be Forgetful When Learning a New Task

Introducing a Task-Optimized Way of Learning

The paper, titled “Task-Optimized Adapters for an End-to-End Task-Oriented Dialogue System,” describes adding adapter modules to a large pre-trained language model (LLM) whose parameters are kept fixed, and training the task-oriented dialogue system with reinforcement learning. The adapters understand what the user says, track the state of the conversation, and are optimized for the purpose of each dialogue task, such as generating the system response.

* Reinforcement Learning: a technique for training a machine learning system based on rewarding desired behaviors or punishing undesired ones.

This method reduces computational cost and improves the performance of the system. An LLM is a type of AI trained on extensive text to generate human-like responses in natural language processing. Conventional fine-tuning updates all of the parameters learned during pre-training. That approach demands a high computational cost and may trigger catastrophic forgetting.

* Fine-tuning: taking a model trained on a large dataset and partially retraining it on a smaller dataset that is more suitable in terms of style and content

* Catastrophic forgetting: the phenomenon of an artificial neural network forgetting previously learned information upon learning new information
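
The adapter idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the bottleneck design, the layer sizes, the zero initialization, and the task names `nlu`, `dst`, and `nlg` are all hypothetical choices made for the example. It shows a frozen layer standing in for the pre-trained model, one small adapter per task, and how few parameters the adapter adds.

```python
import random


def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    return [max(0.0, value) for value in vector]


class FrozenLayer:
    """Stands in for one layer of the pre-trained model; never updated."""

    def __init__(self, dim, rng):
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
                        for _ in range(dim)]

    def forward(self, x):
        return matvec(self.weights, x)

    def num_params(self):
        return len(self.weights) * len(self.weights[0])


class Adapter:
    """Bottleneck adapter: down-project, ReLU, up-project, add residual."""

    def __init__(self, dim, bottleneck, rng):
        self.down = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
                     for _ in range(bottleneck)]
        # Assumption: zero init, so the adapter starts as an identity map.
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def forward(self, hidden):
        delta = matvec(self.up, relu(matvec(self.down, hidden)))
        return [h + d for h, d in zip(hidden, delta)]

    def num_params(self):
        return (len(self.down) * len(self.down[0])
                + len(self.up) * len(self.up[0]))


rng = random.Random(0)
DIM, BOTTLENECK = 64, 4  # illustrative sizes only

layer = FrozenLayer(DIM, rng)
# One independent adapter per task (hypothetical task names).
adapters = {task: Adapter(DIM, BOTTLENECK, rng)
            for task in ("nlu", "dst", "nlg")}

x = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
base_out = layer.forward(x)
adapted_out = adapters["dst"].forward(base_out)

frozen = layer.num_params()
trainable = adapters["dst"].num_params()
print(f"frozen parameters: {frozen}, trainable per adapter: {trainable}")
```

In this sketch only the adapter weights would be trained, so the frozen layer keeps what it learned in pre-training, and each task's adapter can be trained without touching the others.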

Bang and Lee introduce their paper, “Task-Optimized Adapters for an End-to-End Task-Oriented Dialogue System” in the ACL conference handbook:

In this paper, we propose an End-to-end TOD system with Task-Optimized Adapters which learn independently per task, adding only small number of parameters after fixed layers of pre-trained network. We also enhance the performance of the DST and NLG modules through reinforcement learning, overcoming the learning curve that has lacked at the adapter learning and enabling the natural and consistent response generation that is appropriate for the goal. Our method is a model-agnostic approach and does not require prompt-tuning as only input data without a prompt. As results of the experiment, our method shows competitive performance on the MultiWOZ benchmark compared to the existing end-to-end models.

(Conference Handbook, 220)

“The 61st Annual Meeting of the Association for Computational Linguistics.” ACL 2023

“Just as a person forgets old information when taking in too much new information at once, AI experiences a similar phenomenon. So we modified the LLM to learn only the necessary information by adding adapter blocks made up of a small number of parameters.”

- Namo Bang

“The main topic in natural language processing right now is the application of reinforcement learning. Reinforcement learning is already used to improve dialogue systems, but as a side effect it lowers performance on other tasks. The lecture on reinforcement learning I took during the second semester of my graduate course helped me complete the idea of applying adapters to an LLM.”

- Jeehyun Lee

Applying Liberal Arts to Natural Language Processing

The paper is an example of applying a liberal arts background to natural language processing. Bang double majored in Big Data Science and in Mass Communication and Journalism at Sogang University, and Lee majored in Linguistics at Korea University.

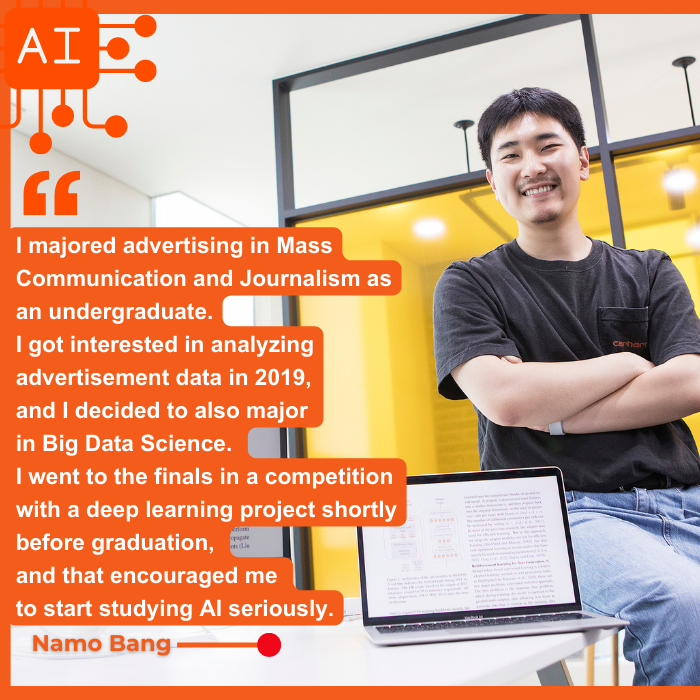

“I majored in advertising within Mass Communication and Journalism as an undergraduate. I got interested in analyzing advertisement data in 2019 and decided to add a major in Big Data Science. I reached the finals of a competition with a deep learning project shortly before graduation, and that encouraged me to start studying AI seriously.”

- Namo Bang

“I took a Python class and a natural language processing class while I was majoring in Linguistics in college. It wasn’t difficult at the start, but I realized that I lacked expertise in AI and coding compared to computer science majors. It was shortly before graduation that I discovered the Smilegate DHE course and decided to transfer.”

- Jeehyun Lee

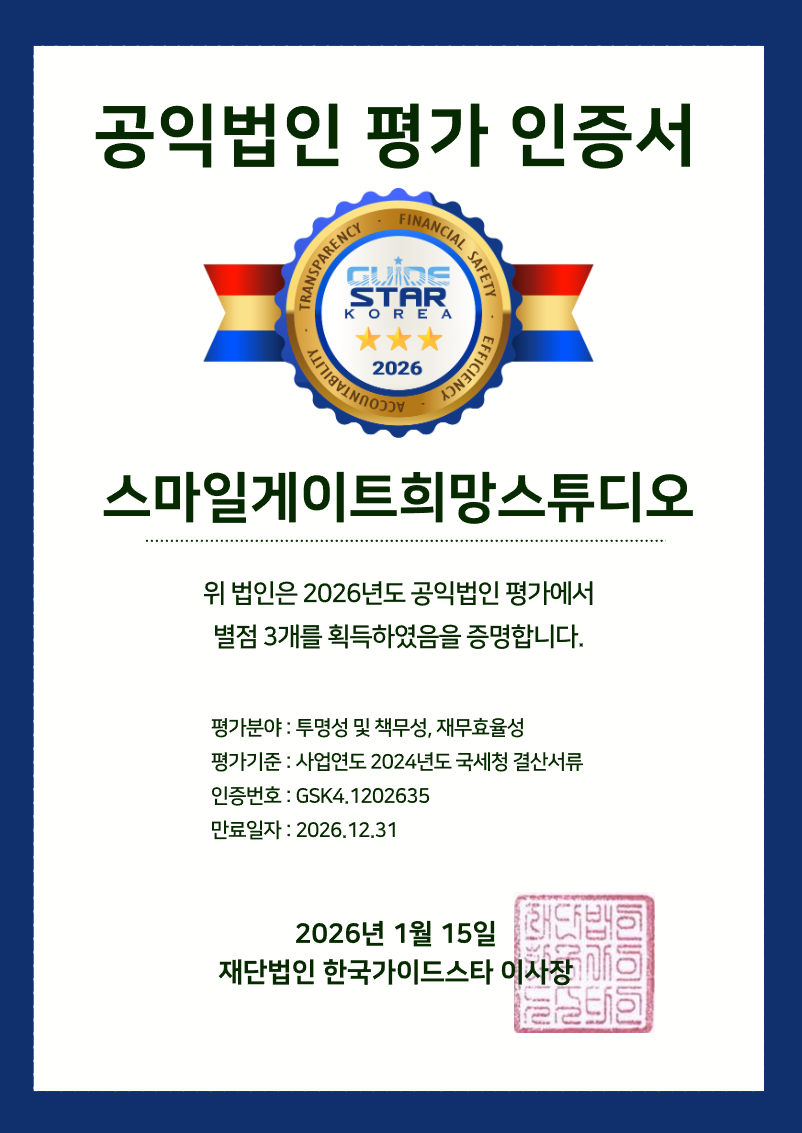

An Opportunity to Grow Expertise in AI: The DHE Scholarship

Bang and Lee are both beneficiaries of the Smilegate DHE scholarship. After being selected as DHE scholars, the two became interested in natural language processing and have conducted research in the ISDS lab led by Professor Myoung-Wan Koo.

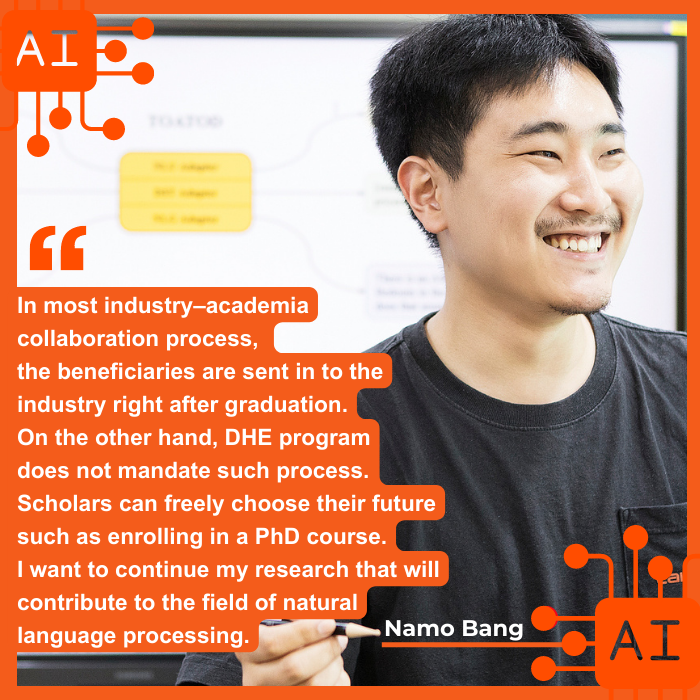

“In most industry–academia collaboration programs, the beneficiaries are sent into industry right after graduation. The DHE program, on the other hand, does not mandate this. Scholars can freely choose their future, such as enrolling in a PhD course. I want to continue research that will contribute to the field of natural language processing.”

- Namo Bang

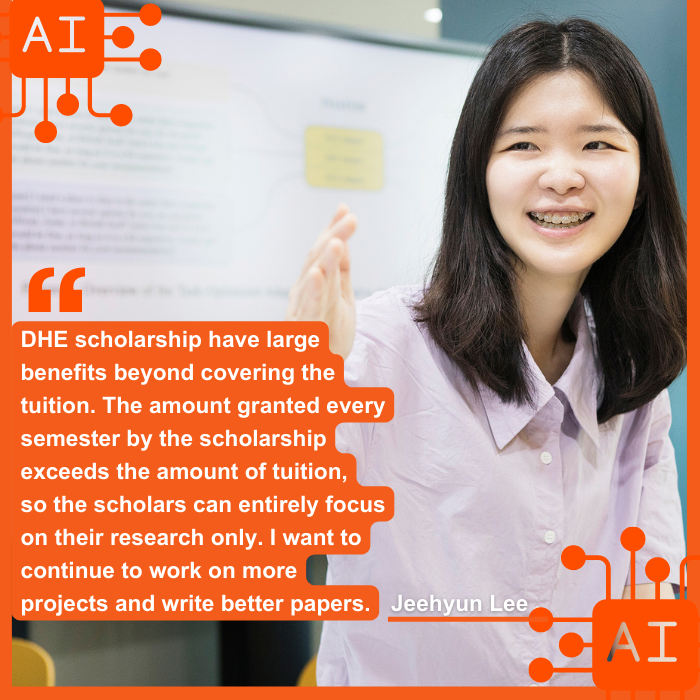

“The DHE scholarship offers substantial benefits beyond covering tuition. The amount granted every semester exceeds the cost of tuition, so scholars can focus entirely on their research. I want to continue to work on more projects and write better papers.”

- Jeehyun Lee

In recent years, the boundary between the natural sciences and the liberal arts has become increasingly blurred. Now that Bang and Lee have established themselves as successful examples of liberal arts majors working in a scientific field, it will be interesting to see what they accomplish next.
